As I said in my previous post, the final part of my project is the implementation of the actual Sync logic. This is done exclusively by exchanging data with the Storage Server. Since Mozilla’s Storage Server does not support push notifications, making Sync work correctly is going to require a bit of tinkering on my part.

What you need to know about the Storage Server is that it is essentially a dumb storage bucket: it only does what you tell it to. Therefore, most of the complexity of Sync is the responsibility of the client. This is good for users’ data security, but can be bad for people implementing Sync clients 🙂

The other thing you need to know about the Storage Server is how it stores data. The basic elements are simple objects called Basic Storage Objects (BSOs), which are organized into named collections. A BSO is the generic JSON wrapper around every item passed into and out of the Storage Server, and it is assigned to a collection together with other related BSOs (e.g. the Bookmarks collection, the History collection, etc.).

Besides a few optional fields, every BSO contains the following mandatory fields:

- id – an identifying string which must be unique within a collection.

- payload – a string containing the data of the record.

- modified – the timestamp at which the BSO was last modified, set by the server.
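To make the three mandatory fields concrete, here is a minimal Python model of a BSO. The class and method names are hypothetical, and the exact JSON shape (field names matching the list above, `modified` as a number of seconds) is an assumption for illustration, not a copy of Epiphany's code.

```python
import json
from dataclasses import dataclass
from typing import Optional


@dataclass
class BSO:
    """Hypothetical client-side model of a Basic Storage Object."""
    id: str                           # must be unique within its collection
    payload: str                      # the (encoded) record data
    modified: Optional[float] = None  # assigned by the server, not the client

    def to_upload_json(self) -> str:
        # The client only sends id and payload; 'modified' comes from the server.
        return json.dumps({"id": self.id, "payload": self.payload})

    @classmethod
    def from_server_json(cls, text: str) -> "BSO":
        # Assumed shape of a BSO as returned by the server.
        obj = json.loads(text)
        return cls(id=obj["id"], payload=obj["payload"], modified=obj["modified"])


bso = BSO.from_server_json('{"id": "abc", "payload": "xyz", "modified": 1467.25}')
```

Keeping `modified` optional mirrors the fact that a freshly created local BSO has no timestamp until the server assigns one.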

As for talking to the Storage Server, we simply send HAWK-signed HTTP requests (e.g. GET, POST, DELETE, etc.) to a collection endpoint or to a specific BSO endpoint.
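The two kinds of endpoints and the HAWK signing step can be sketched as follows. The `/storage/<collection>` and `/storage/<collection>/<id>` path layout is my assumption about the storage API, the function names are hypothetical, and the HAWK header is deliberately minimal: it omits the optional payload hash and `ext` fields, and it assumes the key id and key are the credentials handed out by the Token Server.

```python
import base64
import hashlib
import hmac
import os
import time
from urllib.parse import quote, urlsplit


def collection_url(endpoint: str, collection: str) -> str:
    # Assumed layout: <endpoint>/storage/<collection>
    return f"{endpoint.rstrip('/')}/storage/{quote(collection)}"


def bso_url(endpoint: str, collection: str, bso_id: str) -> str:
    # Assumed layout: <endpoint>/storage/<collection>/<id>
    return f"{collection_url(endpoint, collection)}/{quote(bso_id, safe='')}"


def hawk_header(method, url, key_id, key, ts=None, nonce=None):
    """Build a minimal Hawk Authorization header (no payload hash, no ext)."""
    parts = urlsplit(url)
    ts = int(time.time()) if ts is None else ts
    nonce = nonce or base64.b64encode(os.urandom(6)).decode()
    port = parts.port or (443 if parts.scheme == "https" else 80)
    resource = parts.path + (f"?{parts.query}" if parts.query else "")
    # Hawk's normalized request string, one field per line.
    normalized = (
        "hawk.1.header\n"
        f"{ts}\n{nonce}\n{method.upper()}\n{resource}\n"
        f"{parts.hostname.lower()}\n{port}\n"
        "\n"  # payload hash (omitted in this sketch)
        "\n"  # ext (omitted in this sketch)
    )
    mac = base64.b64encode(
        hmac.new(key, normalized.encode(), hashlib.sha256).digest()
    ).decode()
    return f'Hawk id="{key_id}", ts="{ts}", nonce="{nonce}", mac="{mac}"'


url = bso_url("https://sync.example.com/1.5/42", "bookmarks", "abc")
header = hawk_header("GET", url, "id1", b"secret", ts=1000, nonce="n")
```

The resulting string goes into the request's `Authorization` header; the server recomputes the same MAC from the request it receives, so any change to the method, path, host or port invalidates the signature.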

Since I will only deal with bookmarks sync for the moment, I’ll continue from the bookmarks’ point of view. This is probably a good time to mention that my current work depends heavily on the work of Iulian, who is doing the Bookmarks Subsystem Update, so I had to rebase my branch onto his in order to work with the new bookmarks code.

Before a bookmark is uploaded to the server, it has to go through a few steps:

- Serialize. For an object to be serializable to JSON, it has to implement JSON-GLib’s JsonSerializable interface, so that’s what we did for EphyBookmark.

- Encrypt. More specifically, the serialized bookmark is encrypted with AES-256 using the Sync Key retrieved from the FxA Server.

- URL-safe encode. Since the BSO’s payload is a string sent over HTTP, we can’t send the raw encrypted bytes, so we encode them with URL-safe base64.
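The three upload steps chain together into a single function. In this sketch the bookmark is a plain dict with hypothetical fields, and the cipher is passed in as a callable because AES-256 is not in Python's standard library; `placeholder_encrypt` only exists so the example runs and must be replaced with real AES-256 using the Sync Key.

```python
import base64
import json
from typing import Callable


def encode_payload(bookmark: dict, encrypt: Callable[[bytes], bytes]) -> str:
    """Serialize -> encrypt -> URL-safe base64, in the order listed above."""
    serialized = json.dumps(bookmark, separators=(",", ":")).encode("utf-8")
    ciphertext = encrypt(serialized)  # stand-in for AES-256 with the Sync Key
    return base64.urlsafe_b64encode(ciphertext).decode("ascii")


def placeholder_encrypt(data: bytes) -> bytes:
    # Placeholder ONLY: reverses the bytes so the example runs with the
    # standard library. This is NOT encryption; plug in AES-256 here.
    return data[::-1]


bookmark = {"id": "abc", "title": "GNOME", "url": "https://www.gnome.org"}
payload = encode_payload(bookmark, placeholder_encrypt)
```

URL-safe base64 uses `-` and `_` instead of `+` and `/`, so the payload string can travel in JSON bodies and URLs without further escaping.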

Next, we create the BSO with the given id and payload, send it to the server, and set its modified value to the one returned by the server. Naturally, when downloading a BSO from the server, the steps run in reverse order: decode -> decrypt -> deserialize.
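The download direction simply undoes each step. As before, the decryption function is injected as a placeholder for AES-256. Reading the new timestamp from an `X-Last-Modified` response header is an assumption about how the server reports `modified`, and the helper names are hypothetical.

```python
import base64
import json
from typing import Callable


def decode_payload(payload: str, decrypt: Callable[[bytes], bytes]) -> dict:
    """Reverse pipeline: URL-safe base64 decode -> decrypt -> deserialize."""
    padded = payload + "=" * (-len(payload) % 4)  # tolerate stripped padding
    ciphertext = base64.urlsafe_b64decode(padded)
    plaintext = decrypt(ciphertext)  # stand-in for AES-256 decryption
    return json.loads(plaintext.decode("utf-8"))


def modified_from_response(headers: dict) -> float:
    # Assumption: the server reports the BSO's new timestamp in this header.
    # Real HTTP headers are case-insensitive; a plain dict is used for brevity.
    return float(headers["X-Last-Modified"])


# Placeholder cipher for the example only (byte reversal, NOT decryption).
payload = base64.urlsafe_b64encode(b'{"id":"abc"}'[::-1]).decode().rstrip("=")
record = decode_payload(payload, lambda data: data[::-1])
stamp = modified_from_response({"X-Last-Modified": "1467295200.12"})
```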

OK, now that you know how to interact with the Storage Server, back to the Sync logic. I’m not sure I’ll have time to finish implementing it before GUADEC; maybe I’ll do it there during one of the BOFs, who knows?

Either way, the actual Sync process should look something along these lines:

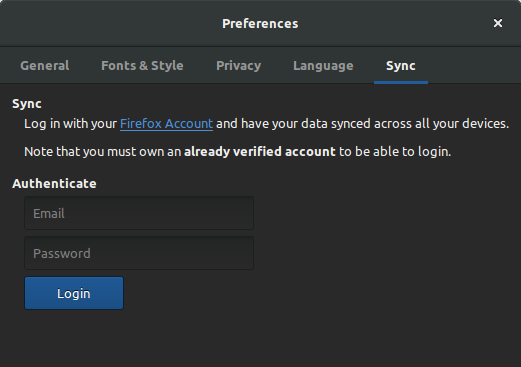

- The user signs in.

- Retrieve the Sync Key from the FxA Server.

- Retrieve the storage endpoint and credentials from the Token Server.

- Merge the local bookmarks with the remote ones from the Storage Server, if any.

- Every time a bookmark is added or edited, (re)upload it to the server.

- Every time a bookmark is deleted, delete it from the server as well.

- Periodically check the server for changes to the Bookmarks collection and, if there are any, mirror them to the local instance (this is going to be a bit tricky for deletions, since a BSO that has been deleted can no longer be tracked).
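The merge and mirroring steps could be sketched as a last-writer-wins merge keyed by BSO id, plus a set difference to spot deletions. This is one possible approach, not necessarily what Epiphany will end up doing. It assumes every local record carries the server timestamp of its last sync, that the client remembers which ids were on the server at the previous sync, and that it can fetch the complete list of ids in the collection; `Record`, `merge` and `remote_deletions` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Dict, Set


@dataclass
class Record:
    modified: float  # server timestamp of the last known change
    data: dict       # decoded bookmark


def merge(local: Dict[str, Record], remote: Dict[str, Record]) -> Dict[str, Record]:
    """Last-writer-wins merge keyed by BSO id."""
    merged = dict(local)
    for bso_id, rec in remote.items():
        # Take the remote copy if it is new to us or strictly more recent.
        if bso_id not in merged or rec.modified > merged[bso_id].modified:
            merged[bso_id] = rec
    return merged


def remote_deletions(previously_synced: Set[str], remote_ids: Set[str]) -> Set[str]:
    """Ids that were on the server at the last sync but are gone now."""
    return previously_synced - remote_ids


local = {"a": Record(1.0, {"t": "old"}), "b": Record(5.0, {"t": "b"})}
remote = {"a": Record(2.0, {"t": "new"}), "c": Record(3.0, {"t": "c"})}
merged = merge(local, remote)
gone = remote_deletions({"a", "b", "d"}, {"a", "b", "c"})
```

Comparing id sets works around the deletion problem without server support, at the cost of fetching the full id list on every check.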

That’s it for the moment, see you at GUADEC!